Introduction to FastAI

First in a series on understanding FastAI.

FastAI is a deep learning library that provides practitioners with high-level components that can quickly and easily provide state-of-the-art results in standard deep learning domains, and low-level components that can be mixed and matched to build new approaches.

The FastAI library includes:

- a dispatch system for Python along with a semantic type hierarchy for tensors

- a GPU-optimized computer vision library

- an optimizer which refactors out the common functionality of modern optimizers into two basic pieces, allowing optimization algorithms to be implemented in 4-5 lines of code.

- a novel 2-way callback system that can access any part of the data, model, or optimizer and change it at any point during training

- a new data block API

FastAI follows a layered design: it aims to offer the clarity and development speed of Keras together with the customizability of PyTorch, a combination that is hard to achieve in other frameworks.

FastAI was co-founded by Jeremy Howard, who is a data scientist, researcher, developer, educator, and entrepreneur, and Rachel Thomas, who is a professor of practice at Queensland University of Technology.

Let's dig into some FastAI code to see how it works. Here is an example of how to fine-tune an ImageNet model on the Oxford-IIIT Pet dataset and achieve close to state-of-the-art accuracy within a couple of minutes of training on a single GPU.

from fastai.vision.all import *
path = untar_data(URLs.PETS)/'images'

def is_cat(x):
    return x[0].isupper()

dls = ImageDataLoaders.from_name_func(path,
    get_image_files(path),
    valid_pct=0.2,
    seed=42,
    label_func=is_cat,
    item_tfms=Resize(224))

learn = cnn_learner(dls, resnet34, metrics=error_rate)
learn.fine_tune(1)

Each line of this code does one important task:

- The second line (path = untar_data(URLs.PETS)/'images') downloads a standard dataset from the fast.ai datasets collection (if not previously downloaded) to a configurable location (~/.fastai/data by default), extracts it (if not previously extracted), and returns a pathlib.Path object with the extracted location.

- Then, dls = ImageDataLoaders.from_name_func(...) sets up a DataLoaders object, which combines the training and validation data.

After defining the DataLoaders object, we can easily look at the data with a single line of code:

dls.show_batch()

Let's analyze the parameters passed to ImageDataLoaders:

- valid_pct is the fraction of the data held out as a validation set (0.2 means 20%). The model never trains on these images, so they tell us whether it is overfitting. By default, valid_pct=0.2. As Jeremy Howard puts it: "Overfitting is the single most important and challenging issue. It is easy to create a model that does a great job at making predictions on the exact data it has been trained on, but it is much harder to make accurate predictions on data the model has never seen before."
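To make label_func, valid_pct and seed concrete, here is a small plain-Python sketch. It assumes, as in the fast.ai course, that cat images in the Oxford-IIIT Pet dataset have filenames starting with an uppercase letter. The filenames and the split_valid helper are hypothetical illustrations, not fastai's own splitter (fastai uses RandomSplitter for this); the point is that a fixed seed puts the same items in the validation set on every run.

```python
import random

def is_cat(x):
    # Oxford-IIIT Pet convention: cat breeds are capitalized in the filename
    return x[0].isupper()

def split_valid(items, valid_pct=0.2, seed=42):
    # hypothetical helper: shuffle indices with a fixed seed so the same
    # items land in the validation set every run, then hold out valid_pct
    idxs = list(range(len(items)))
    random.Random(seed).shuffle(idxs)
    cut = int(valid_pct * len(items))
    valid = [items[i] for i in idxs[:cut]]
    train = [items[i] for i in idxs[cut:]]
    return train, valid

# made-up filenames following the dataset's naming pattern
files = [f"Abyssinian_{i}.jpg" for i in range(5)] + [f"beagle_{i}.jpg" for i in range(5)]
train, valid = split_valid(files)
print(len(train), len(valid))      # 8 2
print([is_cat(f) for f in valid])  # labels come from the filename alone
```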

Next, we create a Learner, which combines an optimizer, a model, and the data to train on. The line learn = cnn_learner(dls, resnet34, metrics=error_rate) downloads an ImageNet-pretrained model (if not already available), removes its classification head and replaces it with a new head suited to our dataset, and sets appropriate defaults for the optimizer, weight decay, learning rate, and so forth.

In short, a Learner brings together our data (dls), an architecture (resnet34), which is the mathematical function whose parameters we optimize, and a metric (error_rate). Training the Learner searches for the parameter values that make the architecture's predictions match the labels in the dataset.

A metric is a function that measures the quality of the model's predictions on the validation set, and it is not necessarily the same as the loss. The loss is what training uses to update the parameters: it must respond to small changes in the parameters, so that we can tell whether a change makes the model better or worse.

The next line, learn.fine_tune(1), fits the model. The argument is the number of epochs, that is, how many times the model looks at each image. We use fine_tune instead of fit because we started with a pretrained model and don't want to throw away the capabilities it already has. With fine_tune, the parameters of the pretrained model are updated by training for additional epochs on a task different from the one used for pretraining.

In sum, fine_tune is used for transfer learning, in which we use a pretrained model for a task different from the one it was originally trained for.
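The core idea of fine-tuning can be sketched in plain PyTorch: freeze the pretrained body so only the new head learns, then unfreeze and train everything. This is a simplified illustration under stated assumptions: the small body below is randomly initialized and merely stands in for a pretrained network, the data is random, and fastai's actual fine_tune additionally uses a one-cycle schedule and lower learning rates for earlier layers.

```python
import torch
from torch import nn

torch.manual_seed(0)
body = nn.Sequential(nn.Linear(10, 16), nn.ReLU())  # stand-in for a pretrained body
head = nn.Linear(16, 2)                             # new head for our task
model = nn.Sequential(body, head)

def set_trainable(module, trainable):
    for p in module.parameters():
        p.requires_grad_(trainable)

def train_step(model, x, y, lr=0.1):
    loss = nn.functional.cross_entropy(model(x), y)
    loss.backward()
    with torch.no_grad():
        for p in model.parameters():
            if p.grad is not None:      # frozen parameters get no gradient
                p -= lr * p.grad
                p.grad = None
    return loss.item()

x, y = torch.randn(8, 10), torch.randint(0, 2, (8,))

# Stage 1: body frozen, only the head is updated
set_trainable(body, False)
body_before = [p.clone() for p in body.parameters()]
train_step(model, x, y)
body_unchanged = all(torch.equal(a, b) for a, b in zip(body_before, body.parameters()))

# Stage 2: unfreeze and train the whole model
set_trainable(body, True)
train_step(model, x, y)
body_changed = not all(torch.equal(a, b) for a, b in zip(body_before, body.parameters()))
print(body_unchanged, body_changed)
```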

To train our model on images, the first thing to consider is the size of the inputs, because we don't feed the model one image at a time but several at once (a mini-batch). To group them into a single big array (usually called a tensor) that passes through our model, they all need to be the same size. In FastAI, transforms applied to each individual item, such as an image or its category, are called item transforms; Resize is one example.

Then the DataLoaders class groups items into mini-batches that are ready to be fed to the GPU. By default, fastai gives us 64 items at a time, all stacked up into a single tensor.
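A small PyTorch sketch shows why every item must have the same size before it can be collated into a mini-batch. Here torch.nn.functional.interpolate stands in for fastai's Resize (an assumption for illustration: by default Resize crops rather than squishing), and the image sizes are made up.

```python
import torch
import torch.nn.functional as F

# three fake RGB "images" of different sizes (channels, height, width)
images = [torch.rand(3, 300, 400), torch.rand(3, 250, 250), torch.rand(3, 500, 375)]

try:
    torch.stack(images)  # fails: the shapes do not match
    stacked = True
except RuntimeError:
    stacked = False

# resize each item to 224x224 (an item transform), then collate into one batch
resized = [F.interpolate(im.unsqueeze(0), size=(224, 224), mode="bilinear",
                         align_corners=False).squeeze(0) for im in images]
batch = torch.stack(resized)
print(stacked, batch.shape)  # False torch.Size([3, 3, 224, 224])
```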

Instead of Resize, RandomResizedCrop is also very popular: it crops a different random part of each image on each epoch, so the model sees the same image slightly differently every time. This is a simple technique to reduce overfitting.
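The idea behind RandomResizedCrop can be sketched as: pick a random region of the image, then resize it to a fixed output size. The random_resized_crop helper below is hypothetical and simplified. It only takes square crops, while fastai's transform also randomizes the aspect ratio and treats the validation set differently.

```python
import random
import torch
import torch.nn.functional as F

def random_resized_crop(img, size=224, min_scale=0.3, rng=random):
    # hypothetical helper: choose a random square covering at least
    # min_scale of the largest possible square's area, then resize it
    _, h, w = img.shape
    side_max = min(h, w)
    side_min = max(1, int(side_max * min_scale ** 0.5))
    side = rng.randint(side_min, side_max)
    top = rng.randint(0, h - side)
    left = rng.randint(0, w - side)
    crop = img[:, top:top + side, left:left + side]
    return F.interpolate(crop.unsqueeze(0), size=(size, size), mode="bilinear",
                         align_corners=False).squeeze(0)

rng = random.Random(0)
img = torch.rand(3, 300, 400)  # a fake RGB image
views = [random_resized_crop(img, rng=rng) for _ in range(3)]  # one view per "epoch"
print([tuple(v.shape) for v in views])  # every view is (3, 224, 224)
```

Each view has the same output size, so the views can still be batched, but each one shows a different part of the image.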

Data augmentation refers to creating random variations of our input data, such that they appear different but do not change the meaning of the data. One of the best ways to do data augmentation in FastAI is to use aug_transforms(), which returns a list of standard augmentations (e.g., flipping, rotation, zoom, warping, and changes in brightness and contrast).

Note that these augmentations are applied to a batch of items that have already been resized to the same size. That is why they can be applied to an entire batch at once on the GPU.
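Because every item in a batch has the same size, an augmentation can be applied to the whole batch tensor in one vectorized operation. A minimal sketch of a random horizontal flip, assuming a batch shaped (batch, channels, height, width). The batch_flip helper is hypothetical and is not fastai's implementation.

```python
import torch

def batch_flip(batch, p=0.5):
    # hypothetical helper: pick which items to flip, then flip them all at once
    mask = torch.rand(batch.shape[0], device=batch.device) < p
    flipped = torch.flip(batch, dims=[3])  # reverse the width axis of every item
    return torch.where(mask.view(-1, 1, 1, 1), flipped, batch), mask

device = "cuda" if torch.cuda.is_available() else "cpu"
torch.manual_seed(0)
batch = torch.rand(8, 3, 32, 32, device=device)  # small stand-in for a real batch
out, mask = batch_flip(batch)
print(mask)  # which items were flipped
```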

In this part, we will enlighten the role of

First, we download a sample of the well-known MNIST dataset, containing only images of 3s and 7s, using FastAI:

path = untar_data(URLs.MNIST_SAMPLE)

Then, we look at the train folder, which contains images of the digits '3' and '7':

threes=(path/'train'/'3').ls().sorted()

sevens=(path/'train'/'7').ls().sorted()

threes

Let's look at one particular handwritten image in the '7' folder:

im7_path = sevens[1]

im7=Image.open(im7_path)

im7

To turn an image into numbers, we can convert it to a NumPy array with array(), or to a PyTorch tensor with tensor(). For instance, to show a section of the image:

array(im7)[7:16,8:16]

tensor(im7)[7:16,8:16]

The advantage of PyTorch tensors over NumPy arrays is that tensor calculations can be run on the GPU.
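A minimal sketch of running a computation on the GPU when one is available, falling back to the CPU otherwise. The array values are arbitrary; torch.from_numpy creates a tensor that shares memory with the NumPy array.

```python
import numpy as np
import torch

arr = np.arange(12, dtype=np.float32).reshape(3, 4)
t = torch.from_numpy(arr)           # shares memory with the NumPy array
device = "cuda" if torch.cuda.is_available() else "cpu"
t_dev = t.to(device)                # copies to the GPU if one is present
result = (t_dev * 2).sum().cpu()    # compute on the device, bring the result back
print(device, result.item())        # result is 132.0 on either device
```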

We can also use pandas to display the numeric values of an image, thanks to a very convenient styling method, background_gradient, which shades each cell's background according to its value:

im7_t = tensor(im7)

df=pd.DataFrame(im7_t[7:20,7:20])

df.style.set_properties(**{'font-size':'6pt'}).background_gradient('Greys')

As we can see, the white background pixels are stored as the number 0, black is 255, and shades of grey are in between. Each MNIST image is 28 pixels across and 28 pixels down, for a total of 784 pixels.

Next, we will create a model that helps us recognize 3s and 7s.

First, we create a list of tensors for all the images of sevens and threes:

seven_tensors = [tensor(Image.open(o)) for o in sevens]

three_tensors = [tensor(Image.open(o)) for o in threes]

len(three_tensors),len(seven_tensors)

show_image(three_tensors[2])

three_tensors[2].shape

Next, we follow the machine learning approach described by Arthur Samuel to solve this classification problem:

"Suppose we arrange for some automatic means of testing the effectiveness of any current weight assignment in terms of actual performance and provide a mechanism for altering the weight assignment so as to maximize the performance. We need not go into the details of such a procedure to see that it could be made entirely automatic and to see that a machine so programmed would “learn” from its experience."

So, let's think about a function with parameters. Instead of constructing an ideal image and comparing every image against it, we assign a weight to each pixel of the image. The weighted sum of the pixels then gives us a score that helps us tell the two digits apart.

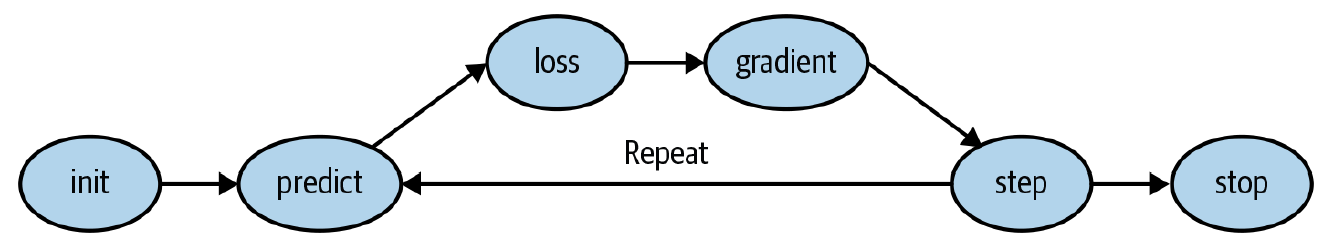

To be more specific, we will build a Machine Learning classifier according to the following steps:

1. Initialize the weights.

2. For each image, use these weights to predict whether it appears to be a 3 or a 7.

3. Based on these predictions, calculate how good the model is (its loss).

4. Calculate the gradient, which measures, for each weight, how changing that weight would change the loss.

5. Step (that is, change) all the weights based on that calculation.

6. Go back to step 2 and repeat the process.

7. Iterate until you decide to stop the training process (for instance, because the model is good enough or you don't want to wait any longer).
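These seven steps can be sketched on a toy problem in plain Python: fitting y = 2x + 1 with a two-parameter linear model and a mean squared error loss. The data, the learning rate, and the hand-derived gradients are assumptions chosen for illustration; in the MNIST example, PyTorch computes the gradients for us.

```python
import random

xs = [i / 10 for i in range(-20, 21)]
ys = [2 * x + 1 for x in xs]              # target function assumed for the demo

random.seed(0)
w, b = random.random(), random.random()   # step 1: initialize the weights
lr = 0.1

for epoch in range(200):                  # step 7: iterate until good enough
    preds = [w * x + b for x in xs]       # step 2: predict
    n = len(xs)
    loss = sum((p - y) ** 2 for p, y in zip(preds, ys)) / n  # step 3: loss
    # step 4: gradients of the MSE loss with respect to w and b
    grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
    grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
    w -= lr * grad_w                      # step 5: step the weights
    b -= lr * grad_b
    # step 6: the loop goes back to step 2

print(round(w, 3), round(b, 3))  # 2.0 1.0
```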

PyTorch has a built-in engine that calculates gradients efficiently and simply. To use it, we start with a tensor and call its special method requires_grad_(). This tells PyTorch to remember every calculation performed on that tensor, so that it can take the derivatives later.

Then we call the special method backward, which performs backpropagation and computes the derivatives for us.

Afterwards, we can view the gradients by checking the grad attribute of our tensor.

def f(x): return x**2

xt=tensor(3.).requires_grad_()

xt

yt=f(xt)

yt

yt.backward()

xt.grad
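The same mechanism works for a tensor holding several values. As a sketch (the input values are arbitrary), if f sums the squares of its inputs, the gradient with respect to each input x is 2x. Note that backward needs a scalar output, which is why f sums.

```python
import torch

def f(x):
    return (x ** 2).sum()   # backward() needs a scalar output

xt = torch.tensor([3.0, 4.0, 10.0], requires_grad=True)
yt = f(xt)
yt.backward()
print(xt.grad)  # the gradient is 2 * xt, i.e. 6, 8 and 20
```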

Deciding how to change our parameters based on the gradients is an important part of the process. The gradient tells us the slope of a function, but it does not tell us exactly how far to adjust our parameters. That's where the learning rate comes in.

The update rule is w -= gradient(w) * lr: each parameter moves in the opposite direction of its gradient, by a step proportional to the learning rate. If the learning rate is too small, the algorithm needs many more steps to converge. If it is too large, the loss can get even worse. So picking a good learning rate is really important.
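To see the effect of the learning rate, here is a plain-Python sketch that minimizes f(x) = x**2 (whose gradient is 2x) starting from x = 3, with three learning rates chosen arbitrarily for illustration.

```python
def gradient_descent(lr, x=3.0, steps=50):
    for _ in range(steps):
        grad = 2 * x          # derivative of x**2
        x -= grad * lr        # the update rule w -= gradient(w) * lr
    return x

for lr in (0.001, 0.1, 1.1):
    print(lr, gradient_descent(lr))
# 0.001: x has barely moved from 3 (too small)
# 0.1:   x is very close to the minimum at 0
# 1.1:   x oscillates with growing size (too large, diverges)
```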

Getting back to MNIST, we need gradients to improve our model using stochastic gradient descent (SGD). To calculate gradients, we need a loss function that represents how good our model is.

# stack the tensors together and scale pixel values to the range 0 to 1

stacked_sevens = torch.stack(seven_tensors).float()/255

stacked_threes = torch.stack(three_tensors).float()/255

# create the items and labels

train_x = torch.cat([stacked_threes, stacked_sevens]).view(-1, 28*28)

train_y = tensor([1]*len(threes) + [0]*len(sevens)).unsqueeze(1)

train_x.shape,train_y.shape

# create dataset

dset = list(zip(train_x,train_y))

valid_3_tens = torch.stack([tensor(Image.open(o))

for o in (path/'valid'/'3').ls()])

valid_3_tens = valid_3_tens.float()/255

valid_7_tens = torch.stack([tensor(Image.open(o))

for o in (path/'valid'/'7').ls()])

valid_7_tens = valid_7_tens.float()/255

valid_3_tens.shape,valid_7_tens.shape

valid_x = torch.cat([valid_3_tens, valid_7_tens]).view(-1, 28*28)

valid_y = tensor([1]*len(valid_3_tens) + [0]*len(valid_7_tens)).unsqueeze(1)

valid_dset = list(zip(valid_x,valid_y))

def init_params(size,std=1.0): return (torch.randn(size)*std).requires_grad_()

weights=init_params((28*28,1))

bias = init_params(1)

We can now calculate a prediction for one image

(train_x[0]*weights.T).sum() + bias

Rather than looping over the pixels of each image, and over the images, we can compute predictions for a whole set of images at once with a single matrix multiplication, which runs efficiently on a GPU:

def linear(xb): return xb@weights + bias

preds = linear(train_x)

preds
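A quick sketch confirms that the matrix multiplication computes the same weighted sum as explicit loops over images and pixels. Random data stands in for train_x, weights and bias here; the shapes match the MNIST example (784 pixels per image, one output).

```python
import torch

torch.manual_seed(0)
xb = torch.rand(5, 28 * 28)          # 5 flattened images (random stand-ins)
weights = torch.randn(28 * 28, 1)
bias = torch.randn(1)

# explicit loops over images and pixels
looped = torch.zeros(5, 1)
for i in range(xb.shape[0]):
    total = 0.0
    for j in range(xb.shape[1]):
        total += xb[i, j] * weights[j, 0]
    looped[i, 0] = total + bias[0]

vectorized = xb @ weights + bias     # one matrix multiplication
print(torch.allclose(looped, vectorized, atol=1e-3))  # True
```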

To decide whether an output represents a 3 or a 7, we can simply threshold it: if the output is greater than 0, we predict a 3; otherwise, we predict a 7. Comparing these predictions with the labels tells us which ones are correct:

corrects = (preds>0.0).float() == train_y

corrects

Notice that we cannot use accuracy as the loss function. A small change in the parameters rarely flips any prediction from a 3 to a 7 (or vice versa), so the accuracy usually stays exactly the same and its gradient is zero almost everywhere, which gives SGD nothing to work with. Instead, we need a loss function that changes smoothly with the predictions. The following function is a first attempt at measuring the distance between predictions and targets:

def mnist_loss(predictions,targets):
    return torch.where(targets==1, 1-predictions, predictions).mean()
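A small worked example makes mnist_loss concrete and also shows why accuracy would not work as a loss. The targets, the predictions, and the nudge of 0.01 are made-up values. For a target of 1 the per-item loss is 1 - prediction, for a target of 0 it is the prediction itself; nudging the predictions changes the loss but leaves the accuracy (computed here by a hypothetical accuracy helper thresholding at 0.5) unchanged.

```python
import torch

def mnist_loss(predictions, targets):
    return torch.where(targets == 1, 1 - predictions, predictions).mean()

def accuracy(predictions, targets):
    # thresholding at 0.5 turns predictions into hard 0/1 decisions
    return ((predictions > 0.5).float() == targets).float().mean()

trgts = torch.tensor([1, 0, 1])
prds = torch.tensor([0.9, 0.4, 0.2])
print(torch.where(trgts == 1, 1 - prds, prds))  # per-item distances 0.1, 0.4, 0.8
print(mnist_loss(prds, trgts))                  # their mean, about 0.4333

# nudge each prediction slightly toward its target
nudged = prds + torch.tensor([0.01, -0.01, 0.01])
print(mnist_loss(nudged, trgts))                # the loss drops by 0.01
print(accuracy(prds, trgts), accuracy(nudged, trgts))  # accuracy is unchanged

# the loss has a useful gradient; accuracy has none
p = prds.clone().requires_grad_()
mnist_loss(p, trgts).backward()
print(p.grad)                              # -1/3 for targets of 1, +1/3 for 0
print(accuracy(p, trgts).requires_grad)    # False: the threshold blocks gradients
```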

One problem with mnist_loss is that it assumes the predictions are always between 0 and 1, so we need to ensure that this is always the case. That's where an activation function comes in: the sigmoid function, which maps any number to a value between 0 and 1:

def sigmoid(x): return 1/(1+torch.exp(-x))

plot_function(torch.sigmoid, title='Sigmoid',min=-4,max=4)

Then, let's update mnist_loss with the sigmoid function:

def mnist_loss(predictions,targets):
    predictions = predictions.sigmoid()
    return torch.where(targets==1, 1-predictions, predictions).mean()
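A sketch of why the sigmoid matters, using made-up raw model outputs that fall outside [0, 1]. Without it, a very negative output for a target of 0 produces a negative loss that keeps decreasing without bound; with it, each per-item term stays between 0 and 1. The name mnist_loss_raw is a hypothetical label for the earlier version.

```python
import torch

def mnist_loss_raw(predictions, targets):
    # the first version, without the sigmoid
    return torch.where(targets == 1, 1 - predictions, predictions).mean()

def mnist_loss(predictions, targets):
    predictions = predictions.sigmoid()
    return torch.where(targets == 1, 1 - predictions, predictions).mean()

targets = torch.tensor([0, 0])
raw = torch.tensor([-5.0, -10.0])  # confident, correct "7" predictions

print(mnist_loss_raw(raw, targets))  # tensor(-7.5000): a negative "loss"
print(mnist_loss(raw, targets))      # a small positive value
```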

SGD and mini-batches

To change or update the weights, we take an optimization step. To take an optimizer step, we need to calculate the loss over one or more data items. Calculating it over the whole dataset for every step would take a very long time. On the other hand, calculating it for a single item uses very little information, so it gives an imprecise and unstable gradient.

So we compromise between the two: we calculate the average loss over a few data items at a time (a mini-batch). The number of data items in a mini-batch is called the batch size. A larger batch size gives a more accurate and stable estimate of the gradient of the loss over the dataset, but each step takes longer and there are fewer mini-batches per epoch. Choosing a good batch size is one of the decisions that help us train a model quickly and accurately.

In PyTorch and FastAI, a class called DataLoader does the shuffling and mini-batch collation for us:

coll = range(15)

dl = DataLoader(coll,batch_size=5,shuffle=True)

list(dl)

dl = DataLoader(dset,batch_size=256)

valid_dl = DataLoader(valid_dset,batch_size=256)
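Conceptually, the shuffling and collation that DataLoader performs amounts to shuffling the indices once per epoch and slicing them into chunks of batch_size. A plain-Python sketch: the simple_batches generator is hypothetical and ignores what PyTorch's DataLoader adds on top, such as stacking items into tensors, parallel workers, and samplers.

```python
import random

def simple_batches(items, batch_size, shuffle=True, seed=None):
    # hypothetical helper: shuffle the indices, then slice them into batches
    idxs = list(range(len(items)))
    if shuffle:
        random.Random(seed).shuffle(idxs)
    for start in range(0, len(idxs), batch_size):
        yield [items[i] for i in idxs[start:start + batch_size]]

print(list(simple_batches(range(15), batch_size=5, seed=0)))        # 3 shuffled batches of 5
print(list(simple_batches(range(7), batch_size=3, shuffle=False)))  # [[0, 1, 2], [3, 4, 5], [6]]
```

As the second call shows, the last batch is simply smaller when the dataset size is not a multiple of the batch size.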


def calc_grad(xb,yb,model):
    preds = model(xb)
    loss = mnist_loss(preds,yb)
    loss.backward()

batch=train_x[:4]

calc_grad(batch,train_y[:4],linear)

weights.grad.mean(),bias.grad

calc_grad(batch,train_y[:4],linear)

weights.grad.mean(),bias.grad

If we run calc_grad twice, the gradients come out different, even though we have not changed the weights!

The reason for that is that loss.backward actually adds the gradients of loss into any gradients that are currently stored. So we have to set the current gradients to zero first.

weights.grad.zero_()

bias.grad.zero_();
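A minimal sketch of this accumulation on a single made-up parameter: calling backward twice without zeroing doubles the stored gradient, and grad.zero_() resets it.

```python
import torch

w = torch.tensor(2.0, requires_grad=True)

def loss_fn():
    return (3 * w) ** 2   # d/dw = 18 * w = 36 at w = 2

loss_fn().backward()
first = w.grad.item()
loss_fn().backward()
second = w.grad.item()    # the new gradient was added to the old one
w.grad.zero_()
loss_fn().backward()
third = w.grad.item()     # back to a single gradient after zeroing
print(first, second, third)  # 36.0 72.0 36.0
```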

Then, we will update the weights and bias based on the gradient and learning rate.

def train_epoch(model,lr,params):
    for xb,yb in dl:
        calc_grad(xb,yb,model)
        for p in params:
            p.data -= p.grad*lr
            p.grad.zero_()

(preds>0.0).float() == train_y

Then, we calculate the accuracy

def batch_accuracy(xb,yb):
    preds = xb.sigmoid()
    corrects = (preds>0.5) == yb
    return corrects.float().mean()

batch_accuracy(linear(batch),train_y[:4])

def validate_epoch(model):
    accs = [batch_accuracy(model(xb),yb) for xb,yb in valid_dl]
    return round(torch.stack(accs).mean().item(), 4)

validate_epoch(linear)

Let's train for one epoch:

lr = 1.

params=weights,bias

train_epoch(linear,lr,params)

validate_epoch(linear)

for i in range(20):
    train_epoch(linear,lr,params)
    print(validate_epoch(linear), end=' ')

So we have successfully trained a simple linear model with our own SGD loop and reached an accuracy of up to 97.94%.

Creating an optimizer

To avoid writing the parameter initialization ourselves, we can replace our linear() function with PyTorch's nn.Linear module.

nn.Linear does the same thing as our init_params and linear together: it holds both the weights and the bias in a single class.

linear_model = nn.Linear(28*28,1)

w,b = linear_model.parameters()

w.shape,b.shape
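One detail worth checking: nn.Linear stores its weight with shape (out_features, in_features), the transpose of our (28*28, 1) weights, and computes xb @ weight.T + bias. A quick sketch on random inputs:

```python
import torch
from torch import nn

linear_model = nn.Linear(28 * 28, 1)
w, b = linear_model.parameters()
print(w.shape, b.shape)  # torch.Size([1, 784]) torch.Size([1])

xb = torch.rand(4, 28 * 28)       # random stand-in for a batch of images
manual = xb @ w.T + b             # same computation written by hand
print(torch.allclose(linear_model(xb), manual, atol=1e-6))  # True
```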

Then, we can use these parameters to create an optimizer:

class BasicOptim:
    def __init__(self,params,lr): self.params,self.lr = list(params),lr

    def step(self, *args, **kwargs):
        for p in self.params:
            p.data -= p.grad.data * self.lr

    def zero_grad(self, *args, **kwargs):
        for p in self.params:
            p.grad = None

opt = BasicOptim(linear_model.parameters(),lr)

Then, the new training loop should be:

def train_epoch(model):
    for xb,yb in dl:
        calc_grad(xb,yb,model)
        opt.step()
        opt.zero_grad()

def train_model(model, epochs):
    for i in range(epochs):
        train_epoch(model)
        print(validate_epoch(model), end=' ')

train_model(linear_model,30)

FastAI provides an SGD class that does the same thing as BasicOptim:

linear_model = nn.Linear(28*28,1)

opt = SGD(linear_model.parameters(),lr)

train_model(linear_model,30)

FastAI also provides Learner.fit, which we can use instead of train_model. To create a Learner, we first need to create a DataLoaders object by passing in our training and validation DataLoader objects:

dls=DataLoaders(dl,valid_dl)

learn=Learner(dls,nn.Linear(28*28,1),opt_func=SGD,loss_func=mnist_loss,metrics=batch_accuracy)

learn.fit(10,lr=lr)

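Next, we can go beyond a linear model by adding a nonlinearity between two linear layers:

w1 = init_params((28*28,30))
b1 = init_params(30)
w2 = init_params((30,1))
b2 = init_params(1)

def simple_net(xb):
    s1 = xb@w1 + b1
    res = s1.max(tensor(0.0))
    s2 = res@w2 + b2
    return s2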

The little function s1.max(tensor(0.0)) replaces every negative number with zero; it is called a rectified linear unit (ReLU). In PyTorch, it is also available as F.relu:

plot_function(F.relu)

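Adding a nonlinearity between the linear layers gives us what the universal approximation theorem describes: such a network can approximate any continuous function as closely as we like (over a bounded range of inputs), given enough hidden units and the right parameters.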
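A short sketch shows why the nonlinearity is essential: without it, two linear layers compose into a single linear layer with weight w1 @ w2 and bias b1 @ w2 + b2, so stacking them adds no expressive power. Random matrices stand in for trained parameters; torch.relu does the same thing as s1.max(tensor(0.0)).

```python
import torch

torch.manual_seed(0)
x = torch.randn(4, 28 * 28)
w1, b1 = torch.randn(28 * 28, 30) * 0.05, torch.randn(30)
w2, b2 = torch.randn(30, 1), torch.randn(1)

two_linear = (x @ w1 + b1) @ w2 + b2
one_linear = x @ (w1 @ w2) + (b1 @ w2 + b2)    # a single equivalent linear layer
with_relu = torch.relu(x @ w1 + b1) @ w2 + b2  # the nonlinearity breaks the collapse

print(torch.allclose(two_linear, one_linear, atol=1e-3))  # True
print(torch.allclose(two_linear, with_relu, atol=1e-3))   # False (almost surely)
```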

We can define the same basic neural network more concisely by taking advantage of PyTorch:

simple_net = nn.Sequential(

nn.Linear(28*28,30),

nn.ReLU(),

nn.Linear(30,1)

)

nn.Sequential is a module that calls each of the listed layers or functions in turn.
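To check what "calls each layer in turn" means, this sketch (random input, layer sizes as above) feeds a batch through the Sequential model and through its layers one at a time:

```python
import torch
from torch import nn

simple_net = nn.Sequential(
    nn.Linear(28 * 28, 30),
    nn.ReLU(),
    nn.Linear(30, 1),
)

xb = torch.rand(8, 28 * 28)
out = simple_net(xb)

manual = xb
for layer in simple_net:   # iterates in the order given to the constructor
    manual = layer(manual)

print(out.shape, torch.allclose(out, manual))  # torch.Size([8, 1]) True
```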

learn=Learner(dls,simple_net,opt_func=SGD,loss_func=mnist_loss,metrics=batch_accuracy)


learn.fit(40,0.1)

plt.plot(L(learn.recorder.values).itemgot(2))

For deeper models, we may need a lower learning rate and a few more epochs. In practice, we are free to add as many layers as we like, with a nonlinearity between each pair of layers. However, the deeper the model gets, the harder it is to optimize its parameters. So why would we use a deeper model? The reason is performance: it turns out that with more layers we can use smaller matrices and get better results than we would with larger matrices and fewer layers.

Here is what happens when we train an 18-layer model (ResNet-18) from scratch:

dls=ImageDataLoaders.from_folder(path)

learn=cnn_learner(dls,resnet18,pretrained=False, loss_func=F.cross_entropy, metrics=accuracy)

learn.fit_one_cycle(1,0.1)